RAG 101

Behold! As a part of my minor project, I have decided to go with this.

Well, RAG stands for Retrieval Augmental Generation.

And at its core, it basically is a technique that enables generative artificial intelligence (Gen AI) models to retrieve and imcorporate new information. It modifies interactions with LLMs so that the model responds to user queries with reference to a specified set of documents, using this information to supplement information from its pre-existing training data.

As LLMs often hallucinate or become outdated, so RAG helps.

Fine-tuning vs. RAG comes down to internalization vs. real-time grounding. Fine-tuning updates a model’s weights with task-specific data, useful for static tasks requiring deep reasoning. RAG, on the other hand, retrieves updated content without retraining, offering lower cost and more flexibility. It’s ideal for dynamic, fact-heavy applications, while fine-tuning is better for logic-centric or controlled tasks.

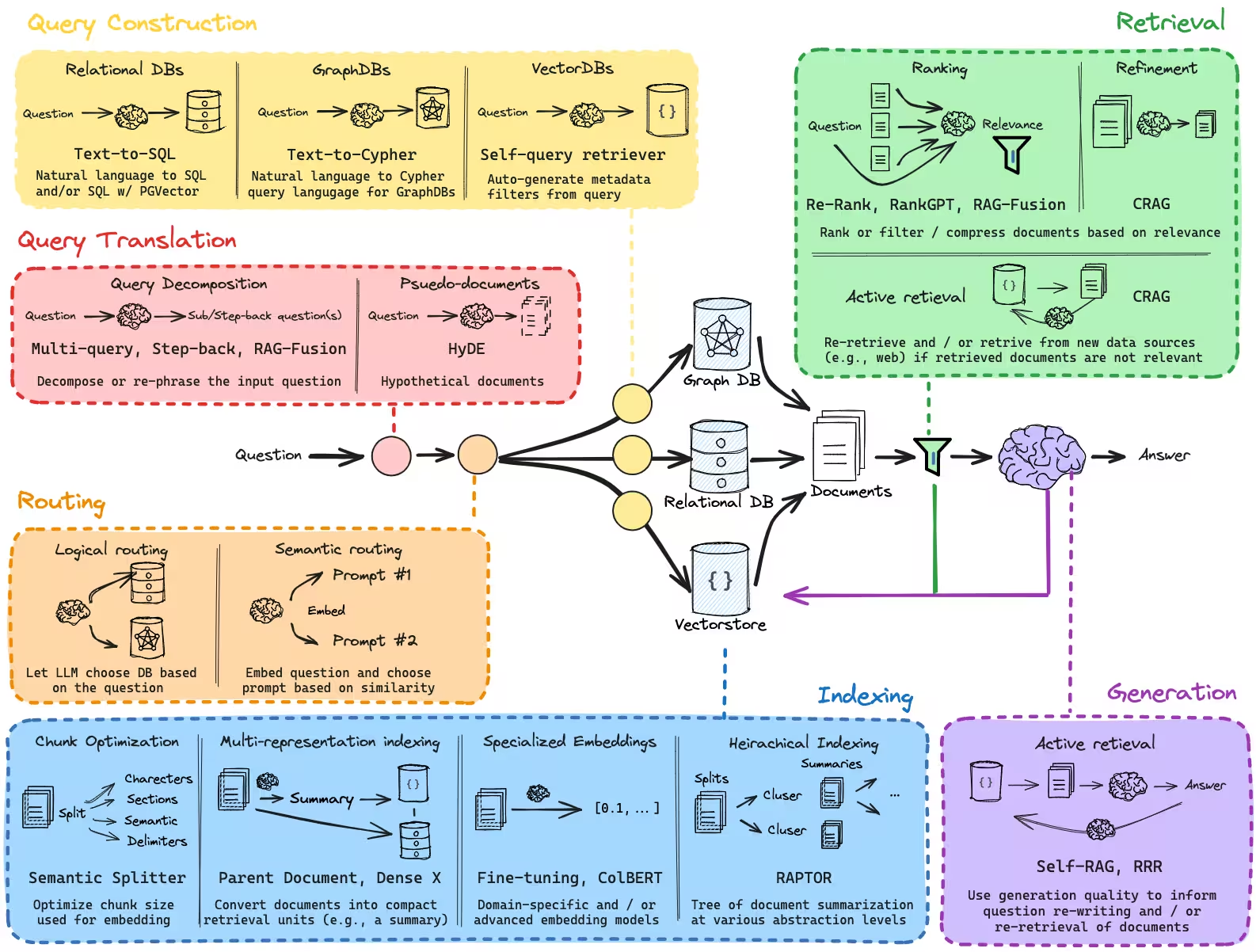

Technically, RAG works in stages: Indexing involves converting reference documents into vector embeddings of words, which are stored in a database for fast retrieval. Retrieval follows, where a query triggers the retrieval of the most relevant documents from the database. In Augmentation, these retrieved documents are combined with the user’s query through prompt engineering, enriching the context. Finally, Generation occurs, where the LLM generates a response using both the augmented input and its internal training data, producing a more accurate, context-aware answer.

RAG is being enhanced with smarter chunking, hybrid retrieval (dense + sparse), and structured knowledge via GraphRAG, which uses knowledge graphs instead of raw text for more precise retrieval. These advances make RAG more interpretable, efficient, and domain-adaptable.

Enough with the theory. Implementation is next.

See you soon.